4.10: Newton’s Method

Learning Objectives

- Describe the steps of Newton’s method.

- Explain what an iterative process means.

- Recognize when Newton’s method does not work.

- Apply iterative processes to various situations.

In many areas of pure and applied mathematics, we are interested in finding solutions to an equation of the form \(f(x)=0.\) For most functions, however, it is difficult, if not impossible, to calculate their zeroes explicitly. In this section, we take a look at a technique that provides a very efficient way of approximating the zeroes of functions. This technique makes use of tangent line approximations and is the idea behind methods often used by calculators and computers to find zeroes.

Describing Newton’s Method

Consider the task of finding the solutions of \(f(x)=0.\) If \(f\) is the first-degree polynomial \(f(x)=ax+b\), then the solution of \(f(x)=0\) is given by the formula \(x=−\frac{b}{a}\). If \(f\) is the second-degree polynomial \(f(x)=ax^2+bx+c\), the solutions of \(f(x)=0\) can be found by using the quadratic formula. However, for polynomials of degree 3 or more, finding roots of \(f\) becomes more complicated. Although formulas exist for third- and fourth-degree polynomials, they are quite complicated. Moreover, if \(f\) is a polynomial of degree 5 or greater, it is known that no general formula of this kind, using only arithmetic operations and radicals, exists. For example, consider the function

\[f(x)=x^5+8x^4+4x^3−2x−7.\nonumber\]

No formula exists that allows us to find the solutions of \(f(x)=0.\) Similar difficulties exist for nonpolynomial functions. For example, consider the task of finding solutions of \(\tan(x)−x=0.\) No simple formula exists for the solutions of this equation. In cases such as these, we can use Newton’s method to approximate the roots.

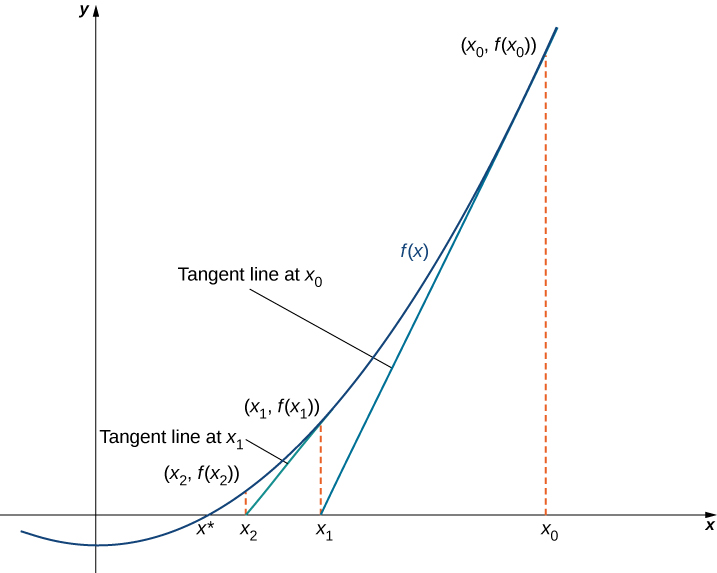

Newton’s method makes use of the following idea to approximate the solutions of \(f(x)=0.\) By sketching a graph of \(f\), we can estimate a root of \(f(x)=0\). Let’s call this estimate \(x_0\). We then draw the tangent line to \(f\) at \(x_0\). If \(f′(x_0)≠0\), this tangent line intersects the \(x\)-axis at some point \((x_1,0)\). Now let \(x_1\) be the next approximation to the actual root. Typically, \(x_1\) is closer than \(x_0\) to an actual root. Next we draw the tangent line to \(f\) at \(x_1\). If \(f′(x_1)≠0\), this tangent line also intersects the \(x\)-axis, producing another approximation, \(x_2\). We continue in this way, generating a list of approximations: \(x_0,\, x_1,\, x_2,\, ….\) Typically, the numbers \(x_0,\, x_1,\, x_2,\, …\) quickly approach an actual root \(x^*\), as shown in Figure \(\PageIndex{1}\).

Now let’s look at how to calculate the approximations \(x_0,\, x_1,\, x_2,\, ….\) If \(x_0\) is our first approximation, the approximation \(x_1\) is defined by letting \((x_1,0)\) be the \(x\)-intercept of the tangent line to \(f\) at \(x_0\). The equation of this tangent line is given by

\[y=f(x_0)+f′(x_0)(x−x_0). \nonumber\]

Therefore, \(x_1\) must satisfy

\[f(x_0)+f′(x_0)(x_1−x_0)=0.\nonumber\]

Solving this equation for \(x_1\), we conclude that

\[x_1=x_0−\frac{f(x_0)}{f'(x_0)}.\nonumber\]

Similarly, the point \((x_2,0)\) is the \(x\)-intercept of the tangent line to \(f\) at \(x_1\). Therefore, \(x_2\) satisfies the equation

\[x_2=x_1−\frac{f(x_1)}{f'(x_1)}.\nonumber\]

In general, for \(n>0\), \(x_n\) satisfies

\[x_n=x_{n−1}−\frac{f(x_{n−1})}{f'(x_{n−1})}.\label{Newton}\]
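Equation \ref{Newton} translates almost line for line into code. The following Python sketch is one possible rendering, assuming the caller supplies both \(f\) and its derivative \(f′\) as functions; the names `newton`, `f`, and `fprime` are illustrative and not part of the text.

```python
from typing import Callable, List


def newton(f: Callable[[float], float],
           fprime: Callable[[float], float],
           x0: float,
           n_steps: int) -> List[float]:
    """Return [x_0, x_1, ..., x_n] built from x_n = x_{n-1} - f(x_{n-1}) / f'(x_{n-1})."""
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        # One Newton step: the x-intercept of the tangent line at (x, f(x)).
        # Python raises ZeroDivisionError if fprime(x) == 0 (horizontal tangent).
        xs.append(x - f(x) / fprime(x))
    return xs


# Usage: one step for f(x) = x^3 - 3x + 1 starting at x_0 = 2.
print(newton(lambda x: x**3 - 3*x + 1, lambda x: 3*x**2 - 3, 2.0, 1))
```

Passing \(f′\) explicitly keeps the sketch close to the formula; in practice the derivative could also come from a symbolic or automatic differentiation tool.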

Next we see how to make use of this technique to approximate the root of the polynomial \(f(x)=x^3−3x+1.\)

Example \(\PageIndex{1}\): Finding a Root of a Polynomial

Use Newton’s method to approximate a root of \(f(x)=x^3−3x+1\) in the interval \([1,2]\). Let \(x_0=2\) and find \(x_1,\, x_2, \,x_3, \,x_4,\) and \(x_5\).

Solution

From Figure \(\PageIndex{2}\), we see that \(f\) has one root over the interval \((1,2)\). Therefore \(x_0=2\) seems like a reasonable first approximation. To find the next approximation, we use Equation \ref{Newton}. Since \(f(x)=x^3−3x+1\), the derivative is \(f′(x)=3x^2−3\). Using Equation \ref{Newton} with \(n=1\) (and a calculator that displays \(10\) digits), we obtain

\[x_1=x_0−\frac{f(x_0)}{f'(x_0)}=2−\frac{f(2)}{f'(2)}=2−\frac{3}{9}≈1.666666667.\nonumber\]

To find the next approximation, \(x_2\), we use Equation \ref{Newton} with \(n=2\) and the value of \(x_1\) stored on the calculator. We find that

\[x_2=x_1-\frac{f(x_1)}{f'(x_1)}≈1.548611111.\nonumber\]

Continuing in this way, we obtain the following results:

- \(x_1≈1.666666667\)

- \(x_2≈1.548611111\)

- \(x_3≈1.532390162\)

- \(x_4≈1.532088989\)

- \(x_5≈1.532088886\)

- \(x_6≈1.532088886.\)

We note that we obtained the same value for \(x_5\) and \(x_6\) to the ten digits displayed. Therefore, any subsequent application of Newton’s method will most likely give the same value for \(x_n\).
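To reproduce the list above on a computer, one can apply Equation \ref{Newton} in a loop. This is a minimal Python sketch; it uses double-precision floats rather than a 10-digit calculator, so the last displayed digit could in principle differ, and the variable names are illustrative.

```python
def f(x: float) -> float:
    return x**3 - 3*x + 1


def fprime(x: float) -> float:
    return 3*x**2 - 3


x = 2.0                            # initial approximation x_0, read off the graph
approximations = []
for n in range(1, 7):
    x = x - f(x) / fprime(x)       # Equation (Newton) with this value of n
    approximations.append(x)
    print(f"x_{n} ≈ {x:.9f}")      # 9 decimal places, i.e. 10 significant digits
```

The gap between successive values shrinks dramatically from one step to the next, which is why two consecutive values agreeing to ten digits is a reasonable place to stop.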

Exercise \(\PageIndex{1}\)

Letting \(x_0=0\), let’s use Newton’s method to approximate the root of \(f(x)=x^3−3x+1\) over the interval \([0,1]\) by calculating \(x_1\) and \(x_2\).

- Hint: Use Equation \ref{Newton}.

- Answer: \(x_1≈0.333333333\), \(x_2≈0.347222222\)
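Because \(x_0=0\) and the coefficients of \(f\) are integers, the first steps of this exercise involve only rational numbers. As a sketch, Python's standard `fractions.Fraction` type can carry out Equation \ref{Newton} exactly, which turns the decimals in the answer into exact fractions.

```python
from fractions import Fraction


def f(x: Fraction) -> Fraction:
    return x**3 - 3*x + 1


def fprime(x: Fraction) -> Fraction:
    return 3*x**2 - 3


xs = [Fraction(0)]                   # x_0 = 0
for _ in range(2):
    x = xs[-1]
    xs.append(x - f(x) / fprime(x))  # exact rational arithmetic, no rounding

for n, x in enumerate(xs):
    print(f"x_{n} = {x} ≈ {float(x):.9f}")
```

This gives \(x_1=\frac{1}{3}\) and \(x_2=\frac{25}{72}≈0.347222222\).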

Newton’s method can also be used to approximate square roots. Here we show how to approximate \(\sqrt{2}\). This method can be modified to approximate the square root of any positive number.

Example \(\PageIndex{2}\): Finding a Square Root

Use Newton’s method to approximate \(\sqrt{2}\) (Figure \(\PageIndex{3}\)). Let \(f(x)=x^2−2\), let \(x_0=2\), and calculate \(x_1,\, x_2,\, x_3,\, x_4,\, x_5\). (We note that since \(f(x)=x^2−2\) has a zero at \(\sqrt{2}≈1.4\), the initial value \(x_0=2\) is a reasonable choice to approximate \(\sqrt{2}\).)

Solution

For \(f(x)=x^2−2\), we have \(f′(x)=2x\). From Equation \ref{Newton}, we know that

\[\begin{align*} x_n&=x_{n−1}−\frac{f(x_{n−1})}{f'(x_{n−1})}\\[4pt]
&=x_{n−1}−\frac{x^2_{n−1}−2}{2x_{n−1}}\\[4pt]
&=\frac{1}{2}x_{n−1}+\frac{1}{x_{n−1}}\\[4pt]
&=\frac{1}{2}\left(x_{n−1}+\frac{2}{x_{n−1}}\right).\end{align*} \]

Therefore,

\(x_1=\frac{1}{2}\left(x_0+\frac{2}{x_0}\right)=\frac{1}{2}\left(2+\frac{2}{2}\right)=1.5\)

\(x_2=\frac{1}{2}\left(x_1+\frac{2}{x_1}\right)=\frac{1}{2}\left(1.5+\frac{2}{1.5}\right)≈1.416666667.\)

Continuing in this way, we find that

\(x_1=1.5\)

\(x_2≈1.416666667\)

\(x_3≈1.414215686\)

\(x_4≈1.414213562\)

\(x_5≈1.414213562.\)

Since we obtained the same value for \(x_4\) and \(x_5\), it is unlikely that the value \(x_n\) will change on any subsequent application of Newton’s method. We conclude that \(\sqrt{2}≈1.414213562.\)
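The same algebra works for \(f(x)=x^2−a\) with any \(a>0\): since \(f′(x)=2x\), Equation \ref{Newton} becomes \(x_n=\frac{1}{2}\left(x_{n−1}+\frac{a}{x_{n−1}}\right)\), a recurrence often called the Babylonian (or Heron's) method. Below is a minimal Python sketch, assuming \(a>0\) and a positive starting value, with a stopping rule that mirrors the one used above (stop when successive approximations agree); `newton_sqrt` and its parameters are illustrative names.

```python
import math


def newton_sqrt(a: float, x0: float, tol: float = 1e-12, max_steps: int = 60) -> float:
    """Approximate sqrt(a) with x_n = (x_{n-1} + a / x_{n-1}) / 2, assuming a > 0 and x0 > 0."""
    if a <= 0 or x0 <= 0:
        raise ValueError("this sketch assumes a > 0 and x0 > 0")
    x = x0
    for _ in range(max_steps):
        x_next = 0.5 * (x + a / x)
        # Stop once two successive approximations agree to a relative tolerance.
        if abs(x_next - x) <= tol * x_next:
            return x_next
        x = x_next
    return x


print(newton_sqrt(2.0, 2.0), math.sqrt(2.0))
```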

Exercise \(\PageIndex{2}\)

Use Newton’s method to approximate \(\sqrt{3}\) by letting \(f(x)=x^2−3\) and \(x_0=3\). Find \(x_1\) and \(x_2\).

- Hint: For \(f(x)=x^2−3\), Equation \ref{Newton} reduces to \(x_n=\frac{x_{n−1}}{2}+\frac{3}{2x_{n−1}}\).

- Answer: \(x_1=2\), \(x_2=1.75\)
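Since \(x_0=3\) is an integer, every step of the reduced recurrence in the hint stays rational, so exact arithmetic is possible. This sketch uses Python's `fractions.Fraction` and includes one extra step to show how quickly the values close in on \(\sqrt{3}\).

```python
from fractions import Fraction

xs = [Fraction(3)]                            # x_0 = 3
for _ in range(3):
    x = xs[-1]
    xs.append(x / 2 + Fraction(3) / (2 * x))  # x_n = x_{n-1}/2 + 3/(2 x_{n-1})

for n, x in enumerate(xs):
    print(f"x_{n} = {x} ≈ {float(x):.7f}")
```

The third step, \(x_3=\frac{97}{56}≈1.7321429\), already differs from \(\sqrt{3}\) by less than \(10^{-4}\).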

When using Newton’s method, each approximation after the initial guess is defined in terms of the previous approximation by using the same formula. In particular, by defining the function \(F(x)=x−\left[\frac{f(x)}{f′(x)}\right]\), we can rewrite Equation \ref{Newton} as \(x_n=F(x_{n−1})\). This type of process, where each \(x_n\) is obtained from \(x_{n−1}\) by applying the same function \(F\) again, is an example of an iterative process. Shortly, we examine other iterative processes. First, let’s look at the reasons why Newton’s method could fail to find a root.
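The rewriting \(x_n=F(x_{n−1})\) separates two ideas: building \(F\) from \(f\), and applying \(F\) over and over. The Python sketch below keeps them as two small, hypothetical helpers (`newton_map` and `iterate`), so the same `iterate` can drive any iterative process, not only Newton’s method.

```python
from typing import Callable, List


def newton_map(f: Callable[[float], float],
               fprime: Callable[[float], float]) -> Callable[[float], float]:
    """Build F(x) = x - f(x)/f'(x) from f and its derivative."""
    def F(x: float) -> float:
        return x - f(x) / fprime(x)
    return F


def iterate(F: Callable[[float], float], x0: float, n: int) -> List[float]:
    """Return [x_0, x_1, ..., x_n] with x_k = F(x_{k-1})."""
    xs = [x0]
    for _ in range(n):
        xs.append(F(xs[-1]))
    return xs


# Usage: Newton's method for f(x) = x^2 - 2 from x_0 = 2, as in the square-root example.
F = newton_map(lambda x: x**2 - 2, lambda x: 2*x)
print(iterate(F, 2.0, 5))
```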

Failures of Newton’s Method

Newton’s method typically finds roots fairly quickly. However, things can go wrong. Some reasons why Newton’s method might fail include the following:

- At one of the approximations \(x_n\), the derivative is zero, \(f′(x_n)=0\), but \(f(x_n)≠0\). As a result, the tangent line to \(f\) at \(x_n\) does not intersect the \(x\)-axis, so we cannot continue the iterative process.

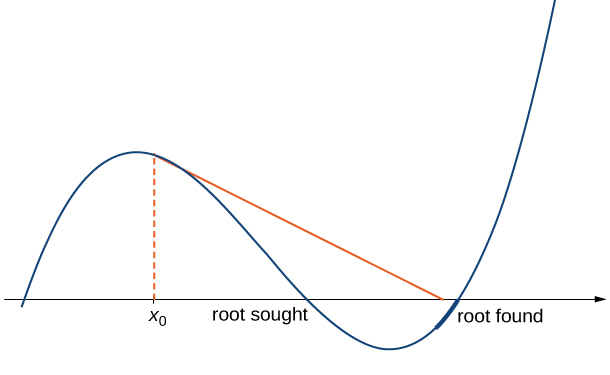

- The approximations \(x_0,\, x_1,\, x_2,\, …\) may approach a different root. If the function \(f\) has more than one root, it is possible that our approximations do not approach the one for which we are looking, but approach a different root (see Figure \(\PageIndex{4}\)). This event most often occurs when we do not choose the approximation \(x_0\) close enough to the desired root.

- The approximations may fail to approach a root entirely. In Example \(\PageIndex{3}\), we give a function and an initial guess \(x_0\) for which the successive approximations never approach a root because they alternate back and forth between two values.
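In code, the first and third failure modes can be detected automatically, while the second cannot: a sequence that converges to the wrong root looks like a success. The following Python sketch (the names `newton_checked` and `NewtonFailure` are hypothetical) stops on a zero derivative and gives up after a fixed number of steps; the tolerance and step limit are arbitrary choices.

```python
from typing import Callable


class NewtonFailure(Exception):
    """Raised when the Newton iteration cannot continue or does not settle down."""


def newton_checked(f: Callable[[float], float],
                   fprime: Callable[[float], float],
                   x0: float,
                   tol: float = 1e-12,
                   max_steps: int = 50) -> float:
    x = x0
    for _ in range(max_steps):
        slope = fprime(x)
        if slope == 0:
            # Failure mode 1: horizontal tangent line, so there is no x-intercept.
            raise NewtonFailure(f"f'(x) = 0 at x = {x}")
        x_next = x - f(x) / slope
        # Stop once successive approximations agree to within tol (scaled by size).
        if abs(x_next - x) <= tol * max(1.0, abs(x_next)):
            return x_next
        x = x_next
    # Failure mode 3: no settling down within max_steps (for example, a cycle).
    raise NewtonFailure(f"no convergence after {max_steps} steps; last x = {x}")


# Usage: f(x) = x^2 - 1 has a horizontal tangent at x_0 = 0.
try:
    newton_checked(lambda x: x**2 - 1, lambda x: 2*x, 0.0)
except NewtonFailure as err:
    print("Newton's method failed:", err)
```

Starting the same function at \(x_0=−0.5\) returns \(−1\) rather than \(1\); the code cannot tell whether that is the root you wanted, which is why a sketch of the graph is still useful for choosing \(x_0\).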

Example \(\PageIndex{3}\): When Newton’s Method Fails

Consider the function \(f(x)=x^3−2x+2\). Let \(x_0=0\). Show that the sequence \(x_1,\, x_2,\, …\) fails to approach a root of \(f\).

Solution

For \(f(x)=x^3−2x+2,\) the derivative is \(f′(x)=3x^2−2\). Therefore,

\[x_1=x_0−\frac{f(x_0)}{f′(x_0)}=0−\frac{f(0)}{f′(0)}=−\frac{2}{−2}=1. \nonumber\]

In the next step,

\[x_2=x_1−\frac{f(x_1)}{f'(x_1)}=1−\frac{f(1)}{f′(1)}=1−\frac{1}{1}=0. \nonumber\]

Consequently, the numbers \(x_0,\, x_1,\, x_2,\, …\) continue to bounce back and forth between \(0\) and \(1\) and never get closer to the root of \(f\), which lies in the interval \([−2,−1]\) (Figure \(\PageIndex{5}\)). Fortunately, if we choose an initial approximation \(x_0\) closer to the actual root, we can avoid this situation.
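The \(0,1,0,1,…\) pattern is easy to confirm numerically. In this short Python sketch both Newton steps happen to be exact in floating-point arithmetic, so the list contains exactly \(0\) and \(1\).

```python
def f(x: float) -> float:
    return x**3 - 2*x + 2


def fprime(x: float) -> float:
    return 3*x**2 - 2


xs = [0.0]                       # x_0 = 0
for _ in range(6):
    x = xs[-1]
    xs.append(x - f(x) / fprime(x))
print(xs)                        # the values alternate between 0 and 1
```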

Exercise \(\PageIndex{3}\)

For \(f(x)=x^3−2x+2,\) let \(x_0=−1.5\) and find \(x_1\) and \(x_2\).

- Hint: Use Equation \ref{Newton}.

- Answer: \(x_1≈−1.842105263\), \(x_2≈−1.772826920\)
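Continuing this exercise beyond \(x_2\) shows that the starting value \(x_0=−1.5\) does lead to the root in \([−2,−1]\). A minimal Python sketch follows; the loop length of 8 is an arbitrary choice that is more than enough here.

```python
def f(x: float) -> float:
    return x**3 - 2*x + 2


def fprime(x: float) -> float:
    return 3*x**2 - 2


xs = [-1.5]                          # x_0 = -1.5, closer to the actual root
for _ in range(8):
    x = xs[-1]
    xs.append(x - f(x) / fprime(x))

print(f"x_1 ≈ {xs[1]:.9f}, x_2 ≈ {xs[2]:.9f}")
print(f"root ≈ {xs[-1]:.9f}, f(root) = {f(xs[-1]):.1e}")
```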

From Example \(\PageIndex{3}\), we see that Newton’s method does not always work. However, when it does work, the sequence of approximations approaches the root very quickly. Discussions of how quickly the sequence of approximations found using Newton’s method approaches a root are included in texts on numerical analysis.

Other Iterative Processes

As mentioned earlier, Newton’s method is a type of iterative process. We now look at an example of a different type of iterative process.

Consider a function \(F\) and an initial number \(x_0\). Define the subsequent numbers \(x_n\) by the formula \(x_n=F(x_{n−1})\). This process is an iterative process that creates a list of numbers \(x_0,\, x_1,\, x_2,\, …,\, x_n,\, ….\) This list of numbers may approach a finite number \(x^*\) as \(n\) gets larger, or it may not. In Example \(\PageIndex{4}\), we see an example of a function \(F\) and an initial guess \(x_0\) such that the resulting list of numbers approaches a finite value.

Example \(\PageIndex{4}\): Finding a Limit for an Iterative Process

Let \(F(x)=\frac{1}{2}x+4\) and let \(x_0=0\). For all \(n≥1\), let \(x_n=F(x_{n−1})\). Find the values \(x_1,\, x_2,\, x_3,\, x_4,\, x_5\). Make a conjecture about what happens to this list of numbers \(x_1,\, x_2,\, x_3,\, …,\, x_n,\, …\) as \(n→∞\). If the list of numbers \(x_1,\, x_2,\, x_3,\, …\) approaches a finite number \(x^*\), then \(x^*\) satisfies \(x^*=F(x^*)\), and \(x^*\) is called a fixed point of \(F\).

Solution

If \(x_0=0\), then

- \(x_1=\frac{1}{2}(0)+4=4\)

- \(x_2=\frac{1}{2}(4)+4=6\)

- \(x_3=\frac{1}{2}(6)+4=7\)

- \(x_4=\frac{1}{2}(7)+4=7.5\)

- \(x_5=\frac{1}{2}(7.5)+4=7.75\)

- \(x_6=\frac{1}{2}(7.75)+4=7.875\)

- \(x_7=\frac{1}{2}(7.875)+4=7.9375\)

- \(x_8=\frac{1}{2}(7.9375)+4=7.96875\)

- \(x _9=\frac{1}{2}(7.96875)+4=7.984375.\)

From this list, we conjecture that the values \(x_n\) approach \(8\).

Figure \(\PageIndex{6}\) provides a graphical argument that the values approach \(8\) as \(n→∞\). Starting at the point \((x_0,x_0)\), we draw a vertical line to the point \((x_0,F(x_0))\). The next number in our list is \(x_1=F(x_0)\). We use \(x_1\) to calculate \(x_2\). Therefore, we draw a horizontal line connecting \((x_0,x_1)\) to the point \((x_1,x_1)\) on the line \(y=x\), and then draw a vertical line connecting \((x_1,x_1)\) to the point \((x_1,F(x_1))\). The output \(F(x_1)\) becomes \(x_2\). Continuing in this way, we could create an infinite number of line segments. These line segments are trapped between the lines \(y=\frac{x}{2}+4\) and \(y=x\). The line segments get closer to the intersection point of these two lines, which occurs when \(x=F(x)\). Solving the equation \(x=\frac{x}{2}+4\), we conclude that they intersect at \(x=8\). Therefore, our graphical evidence agrees with our numerical evidence that the list of numbers \(x_0,\, x_1,\, x_2,\, …\) approaches \(x^*=8\) as \(n→∞\).
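The numerical side of this argument can be checked with a few lines of Python. This sketch also prints the distance \(8−x_n\); since \(F(x)−8=\frac{1}{2}(x−8)\), that distance is cut in half at every step, which is another way to see that \(x_n→8\).

```python
def F(x: float) -> float:
    return 0.5 * x + 4


xs = [0.0]                  # x_0 = 0
for _ in range(9):
    xs.append(F(xs[-1]))    # x_n = F(x_{n-1})

for n, x in enumerate(xs):
    print(n, x, 8 - x)      # index, x_n, distance to the fixed point 8
```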

Exercise \(\PageIndex{4}\)

Consider the function \(F(x)=\frac{1}{3}x+6\). Let \(x_0=0\) and let \(x_n=F(x_{n−1})\) for \(n≥1\). Find \(x_1,\, x_2,\, x_3,\, x_4,\, x_5\). Make a conjecture about what happens to the list of numbers \(x_1,\, x_2,\, x_3,\, …,\, x_n,\, …\) as \(n→∞.\)

- Hint: Consider the point where the lines \(y=x\) and \(y=F(x)\) intersect.

- Answer: \(x_1=6,\;x_2=8,\;x_3=\frac{26}{3},\;x_4=\frac{80}{9},\;x_5=\frac{242}{27};\;x^*=9\)
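Since \(F\) has rational coefficients, the exercise can be checked exactly with Python's `fractions.Fraction`. The check below also uses \(F(x)−9=\frac{1}{3}(x−9)\), which gives \(x_n=9−\frac{9}{3^n}\) when \(x_0=0\), so the distance to the fixed point \(9\) shrinks by a factor of \(3\) at each step.

```python
from fractions import Fraction


def F(x: Fraction) -> Fraction:
    return Fraction(1, 3) * x + 6


xs = [Fraction(0)]              # x_0 = 0
for _ in range(5):
    xs.append(F(xs[-1]))        # x_n = F(x_{n-1}) for n >= 1

for n, x in enumerate(xs):
    # Compare each value with the closed form x_n = 9 - 9/3^n.
    print(n, x, x == 9 - Fraction(9, 3**n))
```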

Iterative Processes and Chaos

Iterative processes can yield some very interesting behavior. In this section, we have seen several examples of iterative processes that converge to a fixed point. We also saw in Example \(\PageIndex{3}\) that the iterative process bounced back and forth between two values. We call this kind of behavior a 2-cycle. Iterative processes can converge to cycles with various periodicities, such as 2-cycles, 4-cycles (where the iterative process repeats a sequence of four values), 8-cycles, and so on.

Some iterative processes yield what mathematicians call chaos. In this case, the iterative process jumps from value to value in a seemingly random fashion and never converges or settles into a cycle. Although a complete exploration of chaos is beyond the scope of this text, in this project we look at one of the key properties of a chaotic iterative process: sensitive dependence on initial conditions. This property refers to the concept that small changes in initial conditions can generate drastically different behavior in the iterative process.

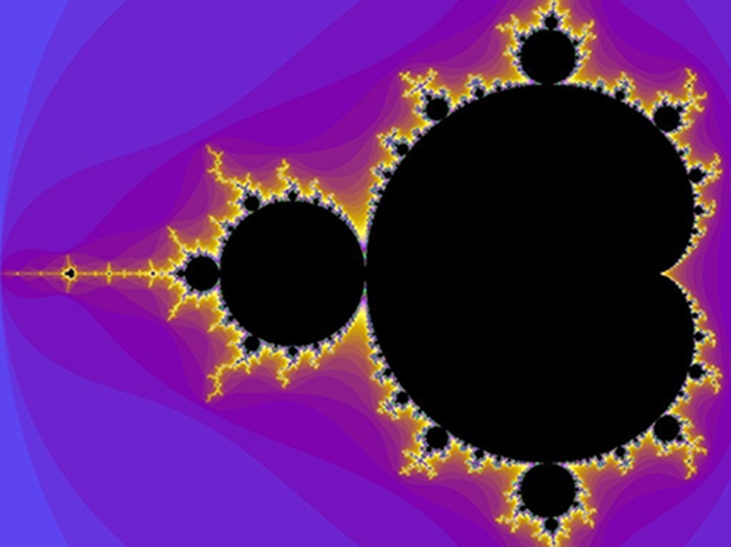

Probably the best-known example of chaos is the Mandelbrot set (see Figure), named after Benoit Mandelbrot (1924–2010), who investigated its properties and helped popularize the field of chaos theory. The Mandelbrot set is usually generated by computer and shows fascinating details on enlargement, including self-replication of the set. Several colorized versions of the set have been shown in museums and can be found online and in popular books on the subject.

In this project we use the logistic map

\[f(x)=rx(1−x)\]

where \(x∈[0,1]\) and \(r>0\), as the function in our iterative process. The logistic map is a deceptively simple function, but depending on the value of \(r\), the resulting iterative process displays some very interesting behavior. It can lead to fixed points, cycles, and even chaos.

To visualize the long-term behavior of the iterative process associated with the logistic map, we will use a tool called a cobweb diagram. As we did with the iterative process we examined earlier in this section, we first draw a vertical line from the point \((x_0,0)\) to the point \((x_0,f(x_0))=(x_0,x_1)\). We then draw a horizontal line from that point to the point \((x_1,x_1),\) then draw a vertical line to \((x_1,f(x_1))=(x_1,x_2)\), and continue the process until the long-term behavior of the system becomes apparent. Figure shows the long-term behavior of the logistic map when \(r=3.55\) and \(x_0=0.2\). (The first \(100\) iterations are not plotted.) The long-term behavior of this iterative process is an \(8\)-cycle.
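The cobweb construction just described is easy to automate: each iteration contributes one vertical segment to the graph of \(f\) and one horizontal segment to the line \(y=x\). This Python sketch only computes the corner points of the path; `cobweb_points` and `logistic` are illustrative names, and drawing the points is left to whatever plotting tool you prefer.

```python
from typing import Callable, List, Tuple


def logistic(r: float) -> Callable[[float], float]:
    """The logistic map f(x) = r x (1 - x) for a fixed r."""
    return lambda x: r * x * (1 - x)


def cobweb_points(f: Callable[[float], float], x0: float,
                  n: int) -> List[Tuple[float, float]]:
    """Corners (x0, 0), (x0, x1), (x1, x1), (x1, x2), (x2, x2), ... of a cobweb path."""
    points = [(x0, 0.0)]
    x = x0
    for _ in range(n):
        y = f(x)
        points.append((x, y))   # vertical segment to the graph of f
        points.append((y, y))   # horizontal segment to the line y = x
        x = y
    return points


# 200 iterations of the logistic map with r = 3.55 and x0 = 0.2.
pts = cobweb_points(logistic(3.55), 0.2, 200)
# Iterations 1..100 produce pts[1:201]; keep only what comes after them.
long_term = pts[1 + 2 * 100:]
print(len(pts), long_term[:4])
```

With matplotlib installed and imported as `plt`, for instance, `plt.plot(*zip(*long_term))` would draw the path; adding the curve \(y=f(x)\) and the line \(y=x\) gives the usual cobweb picture.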

- Let \(r=0.5\) and choose \(x_0=0.2\). Either by hand or by using a computer, calculate the first \(10\) values in the sequence. Does the sequence appear to converge? If so, to what value? Does it result in a cycle? If so, what kind of cycle (for example, a \(2\)-cycle or a \(4\)-cycle)?

- What happens when \(r=2\)?

- For \(r=3.2\) and \(r=3.5\), calculate the first \(100\) sequence values. Generate a cobweb diagram for each iterative process. (Several free applets are available online that generate cobweb diagrams for the logistic map.) What is the long-term behavior in each of these cases?

- Now let \(r=4.\) Calculate the first \(100\) sequence values and generate a cobweb diagram. What is the long-term behavior in this case?

- Repeat the process for \(r=4,\) but let \(x_0=0.201.\) How does this behavior compare with the behavior for \(x_0=0.2\)?
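For the computer-based parts of the project, a short Python sketch can generate the sequences and report whether they settle into a cycle. The helper `detect_period` is a hypothetical, heuristic test: after discarding a transient, it looks for the smallest \(p\) with \(x_{k+p}≈x_k\). The transient length, tolerance, and maximum period are arbitrary choices, and a result of `None` only means that no short cycle was found, not a proof of chaos.

```python
from typing import List, Optional


def logistic_orbit(r: float, x0: float, n: int) -> List[float]:
    """Return [x_0, x_1, ..., x_n] for x_k = r x_{k-1} (1 - x_{k-1})."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append(r * x * (1 - x))
    return xs


def detect_period(r: float, x0: float, transient: int = 2000,
                  max_period: int = 64, tol: float = 1e-9) -> Optional[int]:
    """Smallest p <= max_period with |x_{k+p} - x_k| < tol after the transient, else None."""
    tail = logistic_orbit(r, x0, transient + 2 * max_period)[transient:]
    for p in range(1, max_period + 1):
        if all(abs(tail[k + p] - tail[k]) < tol for k in range(max_period)):
            return p
    return None


for r in (0.5, 2.0, 3.2, 3.5, 3.55, 4.0):
    print(f"r = {r}: period {detect_period(r, 0.2)}")

# Sensitive dependence: same r = 4, starting values differing by 0.001.
a = logistic_orbit(4.0, 0.2, 50)
b = logistic_orbit(4.0, 0.201, 50)
for n in (0, 5, 10, 15, 20, 30, 40, 50):
    print(f"n = {n:2d}: {a[n]:.6f} vs {b[n]:.6f}")
```

For \(r=3.55\), `detect_period` should report \(8\), consistent with the \(8\)-cycle described above; comparing the two columns printed for \(r=4\) is one way to approach the last question of the project.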

Key Concepts

- Newton’s method approximates roots of \(f(x)=0\) by starting with an initial approximation \(x_0\) and then using tangent lines to the graph of \(f\) to create a sequence of approximations \(x_1,\, x_2,\, x_3,\, ….\)

- Typically, Newton’s method is an efficient method for finding a particular root. In certain cases, Newton’s method fails to work because the list of numbers \(x_0,\, x_1,\, x_2,\, …\) does not approach a finite value or it approaches a value other than the root sought.

- Any process in which a list of numbers \(x_0,\, x_1,\, x_2,\, …\) is generated by defining an initial number \(x_0\) and defining the subsequent numbers by the equation \(x_n=F(x_{n−1})\) for some function \(F\) is an iterative process. Newton’s method is an example of an iterative process, where the function \(F(x)=x−\left[\frac{f(x)}{f′(x)}\right]\) for a given function \(f\).

Glossary

- iterative process

- process in which a list of numbers \(x_0,x_1,x_2,x_3…\) is generated by starting with a number \(x_0\) and defining \(x_n=F(x_{n−1})\) for \(n≥1\)

- Newton’s method

- method for approximating roots of \(f(x)=0\): starting from an initial guess \(x_0\), each subsequent approximation is defined by the equation \(x_n=x_{n−1}−\frac{f(x_{n−1})}{f'(x_{n−1})}\)