10.4: Estimating Population Parameters

- Page ID

- 4003

In all the IQ examples in the previous sections, we actually knew the population parameters ahead of time. As every undergraduate gets taught in their very first lecture on the measurement of intelligence, IQ scores are defined to have mean 100 and standard deviation 15. However, this is a bit of a lie. How do we know that IQ scores have a true population mean of 100? Well, we know this because the people who designed the tests have administered them to very large samples, and have then “rigged” the scoring rules so that their sample has mean 100. That’s not a bad thing of course: it’s an important part of designing a psychological measurement. However, it’s important to keep in mind that this theoretical mean of 100 only attaches to the population that the test designers used to design the tests. Good test designers will actually go to some lengths to provide “test norms” that can apply to lots of different populations (e.g., different age groups, nationalities etc).

This is very handy, but of course almost every research project of interest involves looking at a different population of people to those used in the test norms. For instance, suppose you wanted to measure the effect of low level lead poisoning on cognitive functioning in Port Pirie, a South Australian industrial town with a lead smelter. Perhaps you decide that you want to compare IQ scores among people in Port Pirie to a comparable sample in Whyalla, a South Australian industrial town with a steel refinery.151 Regardless of which town you’re thinking about, it doesn’t make a lot of sense simply to assume that the true population mean IQ is 100. No-one has, to my knowledge, produced sensible norming data that can automatically be applied to South Australian industrial towns. We’re going to have to estimate the population parameters from a sample of data. So how do we do this?

Estimating the population mean

Suppose we go to Port Pirie and 100 of the locals are kind enough to sit through an IQ test. The average IQ score among these people turns out to be \(\bar{X}\) =98.5. So what is the true mean IQ for the entire population of Port Pirie? Obviously, we don’t know the answer to that question. It could be 97.2, but if could also be 103.5. Our sampling isn’t exhaustive so we cannot give a definitive answer. Nevertheless if I was forced at gunpoint to give a “best guess” I’d have to say 98.5. That’s the essence of statistical estimation: giving a best guess.

In this example, estimating the unknown poulation parameter is straightforward. I calculate the sample mean, and I use that as my estimate of the population mean. It’s pretty simple, and in the next section I’ll explain the statistical justification for this intuitive answer. However, for the moment what I want to do is make sure you recognise that the sample statistic and the estimate of the population parameter are conceptually different things. A sample statistic is a description of your data, whereas the estimate is a guess about the population. With that in mind, statisticians often different notation to refer to them. For instance, if true population mean is denoted μ, then we would use \(\hat{\mu}\) to refer to our estimate of the population mean. In contrast, the sample mean is denoted \(\bar{X}\) or sometimes m. However, in simple random samples, the estimate of the population mean is identical to the sample mean: if I observe a sample mean of \(\bar{X}\) =98.5, then my estimate of the population mean is also \(\hat{\mu}\)=98.5. To help keep the notation clear, here’s a handy table:

knitr::kable(data.frame(stringsAsFactors=FALSE,

Symbol = c("$\\bar{X}$", "$\\mu$", "$\\hat{\\mu}$"),

What.is.it = c("Sample mean", "True population mean",

"Estimate of the population mean"),

Do.we.know.what.it.is = c("Yes calculated from the raw data",

"Almost never known for sure",

"Yes identical to the sample mean")))| Symbol | What.is.it | Do.we.know.what.it.is |

|---|---|---|

| \(\bar{X}\) | Sample mean | Yes calculated from the raw data |

| μ | True population mean | Almost never known for sure |

| \(\hat{\mu}\) | Estimate of the population mean | Yes identical to the sample mean |

Estimating the population standard deviation

So far, estimation seems pretty simple, and you might be wondering why I forced you to read through all that stuff about sampling theory. In the case of the mean, our estimate of the population parameter (i.e. \(\hat{\mu}\) ) turned out to identical to the corresponding sample statistic (i.e. \(\bar{X}\)). However, that’s not always true. To see this, let’s have a think about how to construct an estimate of the population standard deviation, which we’ll denote \(\hat{\sigma}\). What shall we use as our estimate in this case? Your first thought might be that we could do the same thing we did when estimating the mean, and just use the sample statistic as our estimate. That’s almost the right thing to do, but not quite.

Here’s why. Suppose I have a sample that contains a single observation. For this example, it helps to consider a sample where you have no intutions at all about what the true population values might be, so let’s use something completely fictitious. Suppose the observation in question measures the cromulence of my shoes. It turns out that my shoes have a cromulence of 20. So here’s my sample:

20This is a perfectly legitimate sample, even if it does have a sample size of N=1. It has a sample mean of 20, and because every observation in this sample is equal to the sample mean (obviously!) it has a sample standard deviation of 0. As a description of the sample this seems quite right: the sample contains a single observation and therefore there is no variation observed within the sample. A sample standard deviation of s=0 is the right answer here. But as an estimate of the population standard deviation, it feels completely insane, right? Admittedly, you and I don’t know anything at all about what “cromulence” is, but we know something about data: the only reason that we don’t see any variability in the sample is that the sample is too small to display any variation! So, if you have a sample size of N=1, it feels like the right answer is just to say “no idea at all”.

Notice that you don’t have the same intuition when it comes to the sample mean and the population mean. If forced to make a best guess about the population mean, it doesn’t feel completely insane to guess that the population mean is 20. Sure, you probably wouldn’t feel very confident in that guess, because you have only the one observation to work with, but it’s still the best guess you can make.

Let’s extend this example a little. Suppose I now make a second observation. My data set now has N=2 observations of the cromulence of shoes, and the complete sample now looks like this:

20, 22This time around, our sample is just large enough for us to be able to observe some variability: two observations is the bare minimum number needed for any variability to be observed! For our new data set, the sample mean is \(\bar{X}\) =21, and the sample standard deviation is s=1. What intuitions do we have about the population? Again, as far as the population mean goes, the best guess we can possibly make is the sample mean: if forced to guess, we’d probably guess that the population mean cromulence is 21. What about the standard deviation? This is a little more complicated. The sample standard deviation is only based on two observations, and if you’re at all like me you probably have the intuition that, with only two observations, we haven’t given the population “enough of a chance” to reveal its true variability to us. It’s not just that we suspect that the estimate is wrong: after all, with only two observations we expect it to be wrong to some degree. The worry is that the error is systematic. Specifically, we suspect that the sample standard deviation is likely to be smaller than the population standard deviation.

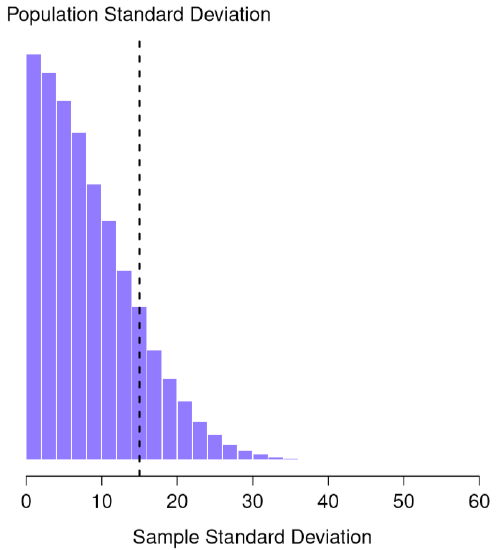

This intuition feels right, but it would be nice to demonstrate this somehow. There are in fact mathematical proofs that confirm this intuition, but unless you have the right mathematical background they don’t help very much. Instead, what I’ll do is use R to simulate the results of some experiments. With that in mind, let’s return to our IQ studies. Suppose the true population mean IQ is 100 and the standard deviation is 15. I can use the rnorm() function to generate the the results of an experiment in which I measure N=2 IQ scores, and calculate the sample standard deviation. If I do this over and over again, and plot a histogram of these sample standard deviations, what I have is the sampling distribution of the standard deviation. I’ve plotted this distribution in Figure 10.11. Even though the true population standard deviation is 15, the average of the sample standard deviations is only 8.5. Notice that this is a very different result to what we found in Figure 10.8 when we plotted the sampling distribution of the mean. If you look at that sampling distribution, what you see is that the population mean is 100, and the average of the sample means is also 100.

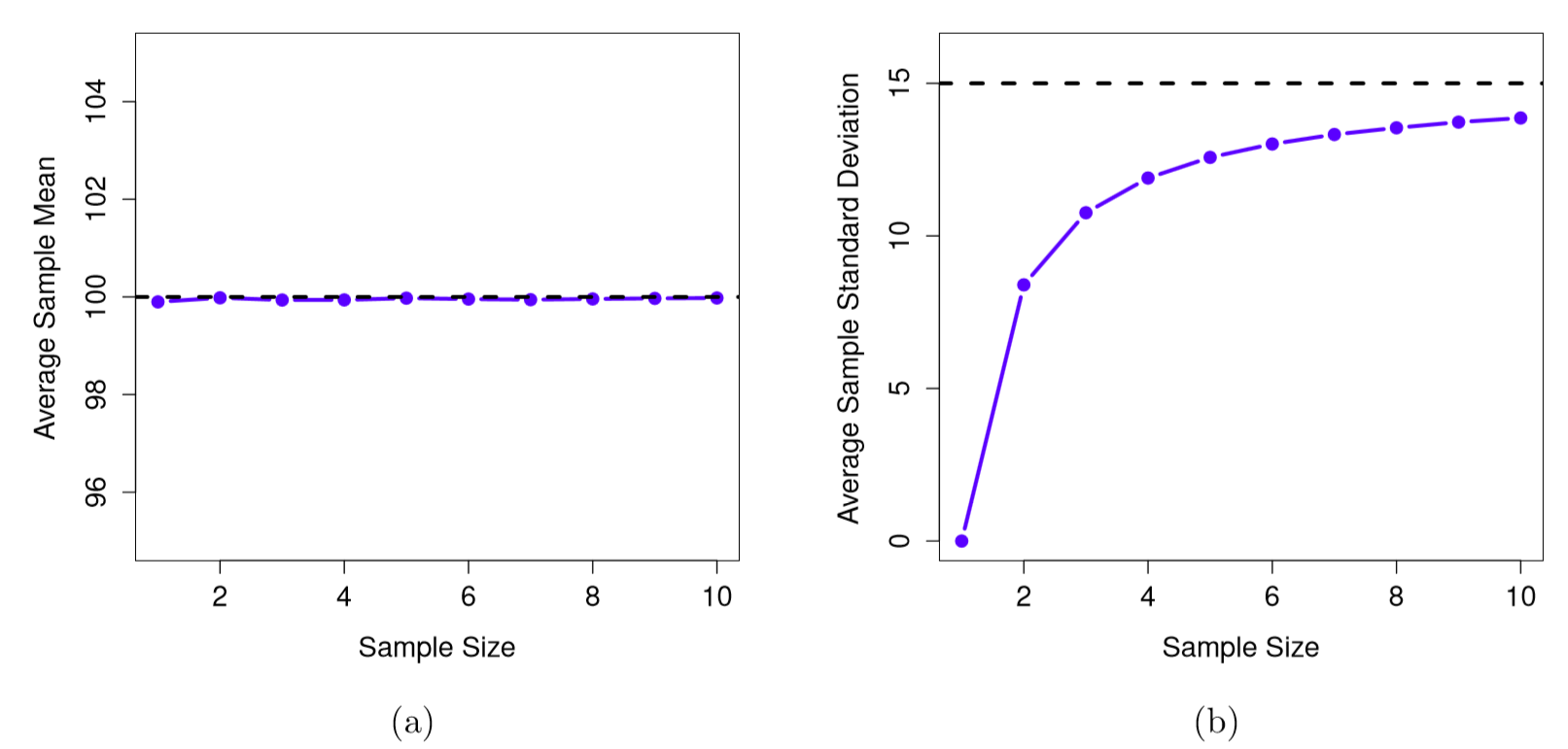

Now let’s extend the simulation. Instead of restricting ourselves to the situation where we have a sample size of N=2, let’s repeat the exercise for sample sizes from 1 to 10. If we plot the average sample mean and average sample standard deviation as a function of sample size, you get the results shown in Figure 10.12. On the left hand side (panel a), I’ve plotted the average sample mean and on the right hand side (panel b), I’ve plotted the average standard deviation. The two plots are quite different: on average, the average sample mean is equal to the population mean. It is an unbiased estimator, which is essentially the reason why your best estimate for the population mean is the sample mean.152 The plot on the right is quite different: on average, the sample standard deviation s is smaller than the population standard deviation σ. It is a biased estimator. In other words, if we want to make a “best guess” \(\hat{\sigma}\) about the value of the population standard deviation σ, we should make sure our guess is a little bit larger than the sample standard deviation s.

The fix to this systematic bias turns out to be very simple. Here’s how it works. Before tackling the standard deviation, let’s look at the variance. If you recall from Section 5.2, the sample variance is defined to be the average of the squared deviations from the sample mean. That is:

\(s^{2}=\dfrac{1}{N} \sum_{i=1}^{N}\left(X_{i}-\bar{X}\right)^{2}\)

The sample variance s2 is a biased estimator of the population variance σ2. But as it turns out, we only need to make a tiny tweak to transform this into an unbiased estimator. All we have to do is divide by N−1 rather than by N. If we do that, we obtain the following formula:

\(\hat{\sigma}\ ^{2}=\dfrac{1}{N-1} \sum_{i=1}^{N}\left(X_{i}-\bar{X}\right)^{2}\)

This is an unbiased estimator of the population variance σ. Moreover, this finally answers the question we raised in Section 5.2. Why did R give us slightly different answers when we used the var() function? Because the var() function calculates \(\hat{\sigma}\ ^{2}\) not s2, that’s why. A similar story applies for the standard deviation. If we divide by N−1 rather than N, our estimate of the population standard deviation becomes:

\(\hat{\sigma}=\sqrt{\dfrac{1}{N-1} \sum_{i=1}^{N}\left(X_{i}-\bar{X}\right)^{2}}\)

and when we use R’s built in standard deviation function sd(), what it’s doing is calculating \(\hat{σ}\), not s.153

One final point: in practice, a lot of people tend to refer to \(\hat{σ}\) (i.e., the formula where we divide by N−1) as the sample standard deviation. Technically, this is incorrect: the sample standard deviation should be equal to s (i.e., the formula where we divide by N). These aren’t the same thing, either conceptually or numerically. One is a property of the sample, the other is an estimated characteristic of the population. However, in almost every real life application, what we actually care about is the estimate of the population parameter, and so people always report \(\hat{σ}\) rather than s. This is the right number to report, of course, it’s that people tend to get a little bit imprecise about terminology when they write it up, because “sample standard deviation” is shorter than “estimated population standard deviation”. It’s no big deal, and in practice I do the same thing everyone else does. Nevertheless, I think it’s important to keep the two concepts separate: it’s never a good idea to confuse “known properties of your sample” with “guesses about the population from which it came”. The moment you start thinking that s and \(\hat{σ}\) are the same thing, you start doing exactly that.

To finish this section off, here’s another couple of tables to help keep things clear:

knitr::kable(data.frame(stringsAsFactors=FALSE,

Symbol = c("$s$", "$\\sigma$", "$\\hat{\\sigma}$", "$s^2$",

"$\\sigma^2$", "$\\hat{\\sigma}^2$"),

What.is.it = c("Sample standard deviation",

"Population standard deviation",

"Estimate of the population standard deviation", "Sample variance",

"Population variance",

"Estimate of the population variance"),

Do.we.know.what.it.is = c("Yes - calculated from the raw data",

"Almost never known for sure",

"Yes - but not the same as the sample standard deviation",

"Yes - calculated from the raw data",

"Almost never known for sure",

"Yes - but not the same as the sample variance")

))| Symbol | What.is.it | Do.we.know.what.it.is |

|---|---|---|

| s | Sample standard deviation | Yes - calculated from the raw data |

| σ | Population standard deviation | Almost never known for sure |

| \(\hat{σ}\) | Estimate of the population standard deviation | Yes - but not the same as the sample standard deviation |

| s2 | Sample variance | Yes - calculated from the raw data |

| σ2 | Population variance | Almost never known for sure |

| \(\hat{\sigma}\ ^{2}\) | Estimate of the population variance | Yes - but not the same as the sample variance |